微服务模式¶

前面我们提到了 Loki 部署的单体模式和读写分离两种模式,当你的每天日志规模超过了 TB 的量级,那么可能我们就需要使用到微服务模式来部署 Loki 了。

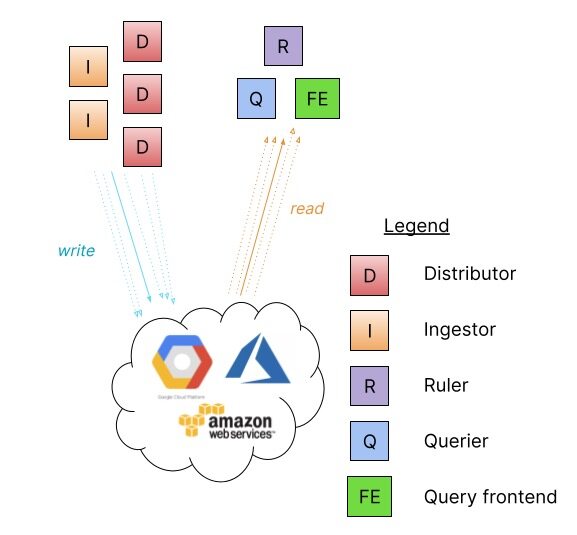

微服务部署模式将 Loki 的组件实例化为不同的进程,每个进程都被调用并指定其目标,每个组件都会产生一个用于内部请求的 gRPC 服务器和一个用于外部 API 请求的 HTTP 服务。

- ingester

- distributor

- query-frontend

- query-scheduler

- querier

- index-gateway

- ruler

- compactor

将组件作为单独的微服务运行允许通过增加微服务的数量来进行扩展,定制的集群对各个组件具有更好的可观察性。微服务模式部署是最高效的 Loki 安装,但是,它们的设置和维护也是最复杂的。

对于超大的 Loki 集群或需要对扩展和集群操作进行更多控制的集群,建议使用微服务模式。

微服务模式最适合在 Kubernetes 集群中部署,提供了 Jsonnet 和 Helm Chart 两种安装方式。

安装¶

同样这里我们还是使用 Helm Chart 的方式来安装微服务模式的 Loki,在安装之前记得将前面章节安装的 Loki 相关服务删除。

首先获取微服务模式的 Chart 包:

$ helm repo add grafana https://grafana.github.io/helm-charts

$ helm pull grafana/loki-distributed --untar --version 0.48.4

$ cd loki-simple-scalable

该 Chart 包支持下表中显示的组件,Ingester、distributor、querier 和 query-frontend 组件是始终安装的,其他组件是可选的。

| 组件 | 可选 | 默认开启? |

|---|---|---|

| gateway | ✅ | ✅ |

| ingester | ❎ | n/a |

| distributor | ❎ | n/a |

| querier | ❎ | n/a |

| query-frontend | ❎ | n/a |

| table-manager | ✅ | ❎ |

| compactor | ✅ | ❎ |

| ruler | ✅ | ❎ |

| index-gateway | ✅ | ❎ |

| memcached-chunks | ✅ | ❎ |

| memcached-frontend | ✅ | ❎ |

| memcached-index-queries | ✅ | ❎ |

| memcached-index-writes | ✅ | ❎ |

该 Chart 包在微服务模式下配置 Loki,已经过测试,可以与 boltdb-shipper 和 memberlist 一起使用,而其他存储和发现选项也可以使用,但是,该图表不支持设置 Consul 或 Etcd 以进行发现,它们需要进行单独配置,相反,可以使用不需要单独的键/值存储的 memberlist。默认情况下该 Chart 包会为成员列表创建了一个 Headless Service,ingester、distributor、querier 和 ruler 是其中的一部分。

安装 minio¶

比如我们这里使用 memberlist、boltdb-shipper 和 minio 来作存储,由于这个 Chart 包没有包含 minio,所以需要我们先单独安装 minio:

$ helm repo add minio https://helm.min.io/

$ helm pull minio/minio --untar --version 8.0.10

$ cd minio

创建一个如下所示的 values 文件:

# ci/loki-values.yaml

accessKey: "myaccessKey"

secretKey: "mysecretKey"

persistence:

enabled: true

storageClass: "local-path"

accessMode: ReadWriteOnce

size: 5Gi

service:

type: NodePort

port: 9000

nodePort: 32000

resources:

requests:

memory: 1Gi

直接使用上面配置的 values 文件安装 minio:

$ helm upgrade --install minio -n logging -f ci/loki-values.yaml .

Release "minio" does not exist. Installing it now.

NAME: minio

LAST DEPLOYED: Sun Jun 19 16:56:28 2022

NAMESPACE: logging

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Minio can be accessed via port 9000 on the following DNS name from within your cluster:

minio.logging.svc.cluster.local

To access Minio from localhost, run the below commands:

1. export POD_NAME=$(kubectl get pods --namespace logging -l "release=minio" -o jsonpath="{.items[0].metadata.name}")

2. kubectl port-forward $POD_NAME 9000 --namespace logging

Read more about port forwarding here: http://kubernetes.io/docs/user-guide/kubectl/kubectl_port-forward/

You can now access Minio server on http://localhost:9000. Follow the below steps to connect to Minio server with mc client:

1. Download the Minio mc client - https://docs.minio.io/docs/minio-client-quickstart-guide

2. Get the ACCESS_KEY=$(kubectl get secret minio -o jsonpath="{.data.accesskey}" | base64 --decode) and the SECRET_KEY=$(kubectl get secret minio -o jsonpath="{.data.secretkey}" | base64 --decode)

3. mc alias set minio-local http://localhost:9000 "$ACCESS_KEY" "$SECRET_KEY" --api s3v4

4. mc ls minio-local

Alternately, you can use your browser or the Minio SDK to access the server - https://docs.minio.io/categories/17

安装完成后查看对应的 Pod 状态:

$ kubectl get pods -n logging

NAME READY STATUS RESTARTS AGE

minio-548656f786-gctk9 1/1 Running 0 2m45s

$ kubectl get svc -n logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

minio NodePort 10.111.58.196 <none> 9000:32000/TCP 3h16m

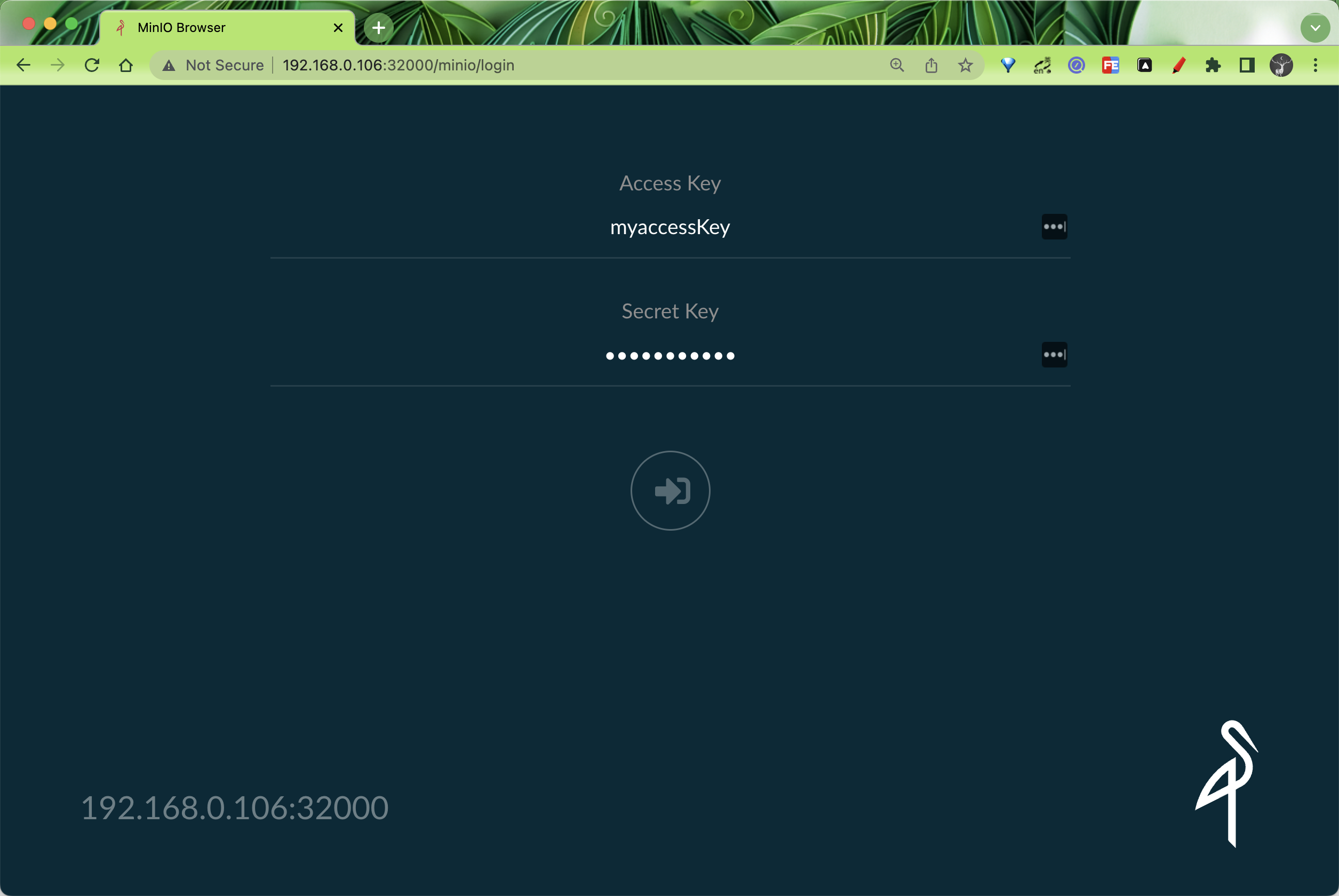

可以通过指定的 32000 端口来访问 minio:

然后记得创建一个名为 loki-data 的 bucket。

安装 Loki¶

现在将我们的对象存储准备好后,接下来我们来安装微服务模式的 Loki,首先创建一个如下所示的 values 文件:

# ci/minio-values.yaml

loki:

structuredConfig:

ingester:

max_transfer_retries: 0

chunk_idle_period: 1h

chunk_target_size: 1536000

max_chunk_age: 1h

storage_config:

aws:

endpoint: minio.logging.svc.cluster.local:9000

insecure: true

bucketnames: loki-data

access_key_id: myaccessKey

secret_access_key: mysecretKey

s3forcepathstyle: true

boltdb_shipper:

shared_store: s3

schema_config:

configs:

- from: 2022-06-21

store: boltdb-shipper

object_store: s3

schema: v12

index:

prefix: loki_index_

period: 24h

distributor:

replicas: 2

ingester:

replicas: 2

persistence:

enabled: true

size: 1Gi

storageClass: local-path

querier:

replicas: 2

persistence:

enabled: true

size: 1Gi

storageClass: local-path

queryFrontend:

replicas: 2

gateway:

nginxConfig:

httpSnippet: |-

client_max_body_size 100M;

serverSnippet: |-

client_max_body_size 100M;

上述配置会选择性地覆盖 loki.config 模板文件中的默认值,使用 loki.structuredConfig 可以在外部设置大多数配置参数。loki.config、loki.schemaConfig 和 loki.storageConfig 也可以与 loki.structuredConfig 结合使用。 loki.structuredConfig 中的值优先级更高。

这里我们通过 loki.structuredConfig.storage_config.aws 指定了用于保存数据的 minio 配置,为了高可用,核心的几个组件我们配置了 2 个副本,ingester 和 querier 配置了持久化存储。

现在使用上面的 values 文件进行一键安装:

$ helm upgrade --install loki -n logging -f ci/minio-values.yaml .

Release "loki" does not exist. Installing it now.

NAME: loki

LAST DEPLOYED: Tue Jun 21 16:20:10 2022

NAMESPACE: logging

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

***********************************************************************

Welcome to Grafana Loki

Chart version: 0.48.4

Loki version: 2.5.0

***********************************************************************

Installed components:

* gateway

* ingester

* distributor

* querier

* query-frontend

上面会分别安装几个组件:gateway、ingester、distributor、querier、query-frontend,对应的 Pod 状态如下所示:

$ kubectl get pods -n logging

NAME READY STATUS RESTARTS AGE

loki-loki-distributed-distributor-5dfdd5bd78-nxdq8 1/1 Running 0 2m40s

loki-loki-distributed-distributor-5dfdd5bd78-rh4gz 1/1 Running 0 116s

loki-loki-distributed-gateway-6f4cfd898c-hpszv 1/1 Running 0 21m

loki-loki-distributed-ingester-0 1/1 Running 0 96s

loki-loki-distributed-ingester-1 1/1 Running 0 2m38s

loki-loki-distributed-querier-0 1/1 Running 0 2m2s

loki-loki-distributed-querier-1 1/1 Running 0 2m33s

loki-loki-distributed-query-frontend-6d9845cb5b-p4vns 1/1 Running 0 4s

loki-loki-distributed-query-frontend-6d9845cb5b-sq5hr 1/1 Running 0 2m40s

minio-548656f786-gctk9 1/1 Running 1 (123m ago) 47h

$ kubectl get svc -n logging

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

loki-loki-distributed-distributor ClusterIP 10.102.156.127 <none> 3100/TCP,9095/TCP 22m

loki-loki-distributed-gateway ClusterIP 10.111.73.138 <none> 80/TCP 22m

loki-loki-distributed-ingester ClusterIP 10.98.238.236 <none> 3100/TCP,9095/TCP 22m

loki-loki-distributed-ingester-headless ClusterIP None <none> 3100/TCP,9095/TCP 22m

loki-loki-distributed-memberlist ClusterIP None <none> 7946/TCP 22m

loki-loki-distributed-querier ClusterIP 10.101.117.137 <none> 3100/TCP,9095/TCP 22m

loki-loki-distributed-querier-headless ClusterIP None <none> 3100/TCP,9095/TCP 22m

loki-loki-distributed-query-frontend ClusterIP None <none> 3100/TCP,9095/TCP,9096/TCP 22m

minio NodePort 10.111.58.196 <none> 9000:32000/TCP 47h

Loki 对应的配置文件如下所示:

$ kubectl get cm -n logging loki-loki-distributed -o yaml

apiVersion: v1

data:

config.yaml: |

auth_enabled: false

chunk_store_config:

max_look_back_period: 0s

compactor:

shared_store: filesystem

distributor:

ring:

kvstore:

store: memberlist

frontend:

compress_responses: true

log_queries_longer_than: 5s

tail_proxy_url: http://loki-loki-distributed-querier:3100

frontend_worker:

frontend_address: loki-loki-distributed-query-frontend:9095

ingester:

chunk_block_size: 262144

chunk_encoding: snappy

chunk_idle_period: 1h

chunk_retain_period: 1m

chunk_target_size: 1536000

lifecycler:

ring:

kvstore:

store: memberlist

replication_factor: 1

max_chunk_age: 1h

max_transfer_retries: 0

wal:

dir: /var/loki/wal

limits_config:

enforce_metric_name: false

max_cache_freshness_per_query: 10m

reject_old_samples: true

reject_old_samples_max_age: 168h

split_queries_by_interval: 15m

memberlist:

join_members:

- loki-loki-distributed-memberlist

query_range:

align_queries_with_step: true

cache_results: true

max_retries: 5

results_cache:

cache:

enable_fifocache: true

fifocache:

max_size_items: 1024

validity: 24h

ruler:

alertmanager_url: https://alertmanager.xx

external_url: https://alertmanager.xx

ring:

kvstore:

store: memberlist

rule_path: /tmp/loki/scratch

storage:

local:

directory: /etc/loki/rules

type: local

schema_config:

configs:

- from: "2022-06-21"

index:

period: 24h

prefix: loki_index_

object_store: s3

schema: v12

store: boltdb-shipper

server:

http_listen_port: 3100

storage_config:

aws:

access_key_id: myaccessKey

bucketnames: loki-data

endpoint: minio.logging.svc.cluster.local:9000

insecure: true

s3forcepathstyle: true

secret_access_key: mysecretKey

boltdb_shipper:

active_index_directory: /var/loki/index

cache_location: /var/loki/cache

cache_ttl: 168h

shared_store: s3

filesystem:

directory: /var/loki/chunks

table_manager:

retention_deletes_enabled: false

retention_period: 0s

kind: ConfigMap

# ......

同样其中有一个 gateway 组件会来帮助我们将请求路由到正确的组件中去,该组件同样就是一个 nginx 服务,对应的配置如下所示:

$ kubectl -n logging exec -it loki-loki-distributed-gateway-6f4cfd898c-hpszv -- cat /etc/nginx/nginx.conf

worker_processes 5; ## Default: 1

error_log /dev/stderr;

pid /tmp/nginx.pid;

worker_rlimit_nofile 8192;

events {

worker_connections 4096; ## Default: 1024

}

http {

client_body_temp_path /tmp/client_temp;

proxy_temp_path /tmp/proxy_temp_path;

fastcgi_temp_path /tmp/fastcgi_temp;

uwsgi_temp_path /tmp/uwsgi_temp;

scgi_temp_path /tmp/scgi_temp;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] $status '

'"$request" $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /dev/stderr main;

sendfile on;

tcp_nopush on;

resolver kube-dns.kube-system.svc.cluster.local;

client_max_body_size 100M;

server {

listen 8080;

location = / {

return 200 'OK';

auth_basic off;

}

location = /api/prom/push {

proxy_pass http://loki-loki-distributed-distributor.logging.svc.cluster.local:3100$request_uri;

}

location = /api/prom/tail {

proxy_pass http://loki-loki-distributed-querier.logging.svc.cluster.local:3100$request_uri;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

# Ruler

location ~ /prometheus/api/v1/alerts.* {

proxy_pass http://loki-loki-distributed-ruler.logging.svc.cluster.local:3100$request_uri;

}

location ~ /prometheus/api/v1/rules.* {

proxy_pass http://loki-loki-distributed-ruler.logging.svc.cluster.local:3100$request_uri;

}

location ~ /api/prom/rules.* {

proxy_pass http://loki-loki-distributed-ruler.logging.svc.cluster.local:3100$request_uri;

}

location ~ /api/prom/alerts.* {

proxy_pass http://loki-loki-distributed-ruler.logging.svc.cluster.local:3100$request_uri;

}

location ~ /api/prom/.* {

proxy_pass http://loki-loki-distributed-query-frontend.logging.svc.cluster.local:3100$request_uri;

}

location = /loki/api/v1/push {

proxy_pass http://loki-loki-distributed-distributor.logging.svc.cluster.local:3100$request_uri;

}

location = /loki/api/v1/tail {

proxy_pass http://loki-loki-distributed-querier.logging.svc.cluster.local:3100$request_uri;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

location ~ /loki/api/.* {

proxy_pass http://loki-loki-distributed-query-frontend.logging.svc.cluster.local:3100$request_uri;

}

client_max_body_size 100M;

}

}

从上面配置可以看出对应的 Push 端点 /api/prom/push 与 /loki/api/v1/push 会转发给 http://loki-loki-distributed-distributor.logging.svc.cluster.local:3100$request_uri;,也就是对应的 distributor 服务:

$ kubectl get pods -n logging -l app.kubernetes.io/component=distributor,app.kubernetes.io/instance=loki,app.kubernetes.io/name=loki-distributed

NAME READY STATUS RESTARTS AGE

loki-loki-distributed-distributor-5dfdd5bd78-nxdq8 1/1 Running 0 8m20s

loki-loki-distributed-distributor-5dfdd5bd78-rh4gz 1/1 Running 0 7m36s

所以如果我们要写入日志数据,自然现在是写入到 gateway 的 Push 端点上去。为了验证应用是否正常,接下来我们再安装 Promtail 和 Grafana 来进行数据的读写。

安装 Promtail¶

获取 promtail 的 Chart 包并解压:

$ helm pull grafana/promtail --untar

$ cd promtail

创建一个如下所示的 values 文件:

# ci/minio-values.yaml

rbac:

pspEnabled: false

config:

clients:

- url: http://loki-loki-distributed-gateway/loki/api/v1/push

注意我们需要将 Promtail 中配置的 Loki 地址为 http://loki-loki-distributed-gateway/loki/api/v1/push,这样就是 Promtail 将日志数据首先发送到 gateway 上面去,然后 gateway 根据我们的 Endpoints 去转发给 write 节点,使用上面的 values 文件来安装 Promtail:

$ helm upgrade --install promtail -n logging -f ci/minio-values.yaml .

Release "promtail" does not exist. Installing it now.

NAME: promtail

LAST DEPLOYED: Tue Jun 21 16:31:34 2022

NAMESPACE: logging

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

***********************************************************************

Welcome to Grafana Promtail

Chart version: 5.1.0

Promtail version: 2.5.0

***********************************************************************

Verify the application is working by running these commands:

* kubectl --namespace logging port-forward daemonset/promtail 3101

* curl http://127.0.0.1:3101/metrics

正常安装完成后会在每个节点上运行一个 promtail:

$ kubectl get pods -n logging -l app.kubernetes.io/name=promtail

NAME READY STATUS RESTARTS AGE

promtail-gbjzs 1/1 Running 0 38s

promtail-gjn5p 1/1 Running 0 38s

promtail-z6vhd 1/1 Running 0 38s

正常 promtail 就已经在开始采集所在节点上的所有容器日志了,然后将日志数据 Push 给 gateway,gateway 转发给 write 节点,我们可以查看 gateway 的日志:

$ kubectl logs -f loki-loki-distributed-gateway-6f4cfd898c-hpszv -n logging

10.244.2.26 - - [21/Jun/2022:08:41:24 +0000] 204 "POST /loki/api/v1/push HTTP/1.1" 0 "-" "promtail/2.5.0" "-"

10.244.2.1 - - [21/Jun/2022:08:41:24 +0000] 200 "GET / HTTP/1.1" 2 "-" "kube-probe/1.22" "-"

10.244.2.26 - - [21/Jun/2022:08:41:25 +0000] 204 "POST /loki/api/v1/push HTTP/1.1" 0 "-" "promtail/2.5.0" "-"

10.244.1.28 - - [21/Jun/2022:08:41:26 +0000] 204 "POST /loki/api/v1/push HTTP/1.1" 0 "-" "promtail/2.5.0" "-"

......

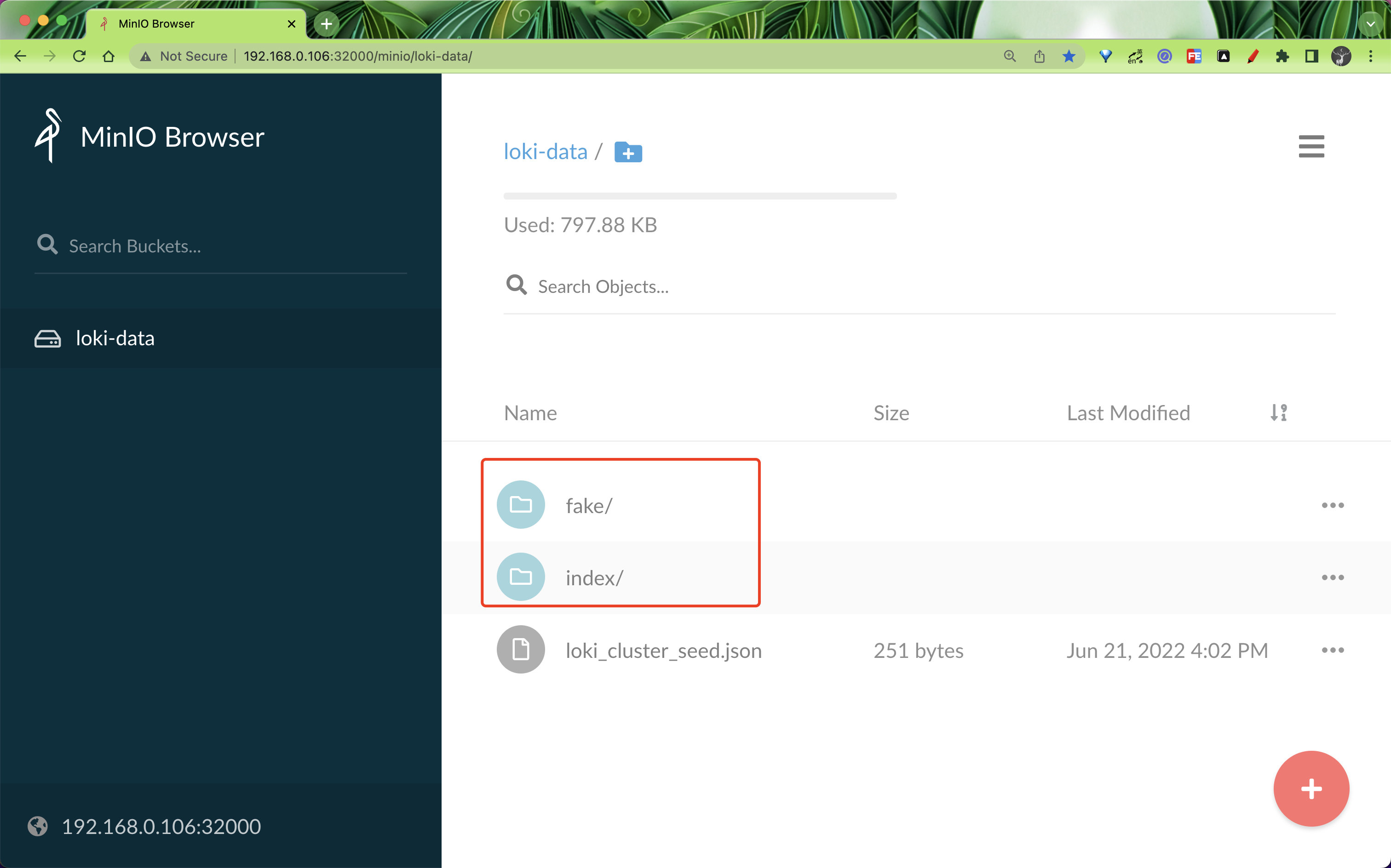

可以看到 gateway 现在在一直接接收着 /loki/api/v1/push 的请求,也就是 promtail 发送过来的,正常来说现在日志数据已经分发给 write 节点了,write 节点将数据存储在了 minio 中,可以去查看下 minio 中已经有日志数据了,前面安装的时候为 minio 服务指定了一个 32000 的 NodePort 端口:

到这里可以看到数据已经可以正常写入了。

安装 Grafana¶

下面我们来验证下读取路径,安装 Grafana 对接 Loki:

$ helm pull grafana/grafana --untar

$ cd grafana

创建如下所示的 values 配置文件:

# ci/minio-values.yaml

service:

type: NodePort

nodePort: 32001

rbac:

pspEnabled: false

persistence:

enabled: true

storageClassName: local-path

accessModes:

- ReadWriteOnce

size: 1Gi

直接使用上面的 values 文件安装 Grafana:

$ helm upgrade --install grafana -n logging -f ci/minio-values.yaml .

Release "grafana" does not exist. Installing it now.

NAME: grafana

LAST DEPLOYED: Tue Jun 21 16:47:54 2022

NAMESPACE: logging

STATUS: deployed

REVISION: 1

NOTES:

1. Get your 'admin' user password by running:

kubectl get secret --namespace logging grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

2. The Grafana server can be accessed via port 80 on the following DNS name from within your cluster:

grafana.logging.svc.cluster.local

Get the Grafana URL to visit by running these commands in the same shell:

export NODE_PORT=$(kubectl get --namespace logging -o jsonpath="{.spec.ports[0].nodePort}" services grafana)

export NODE_IP=$(kubectl get nodes --namespace logging -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

3. Login with the password from step 1 and the username: admin

可以通过上面提示中的命令获取登录密码:

$ kubectl get secret --namespace logging grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

然后使用上面的密码和 admin 用户名登录 Grafana:

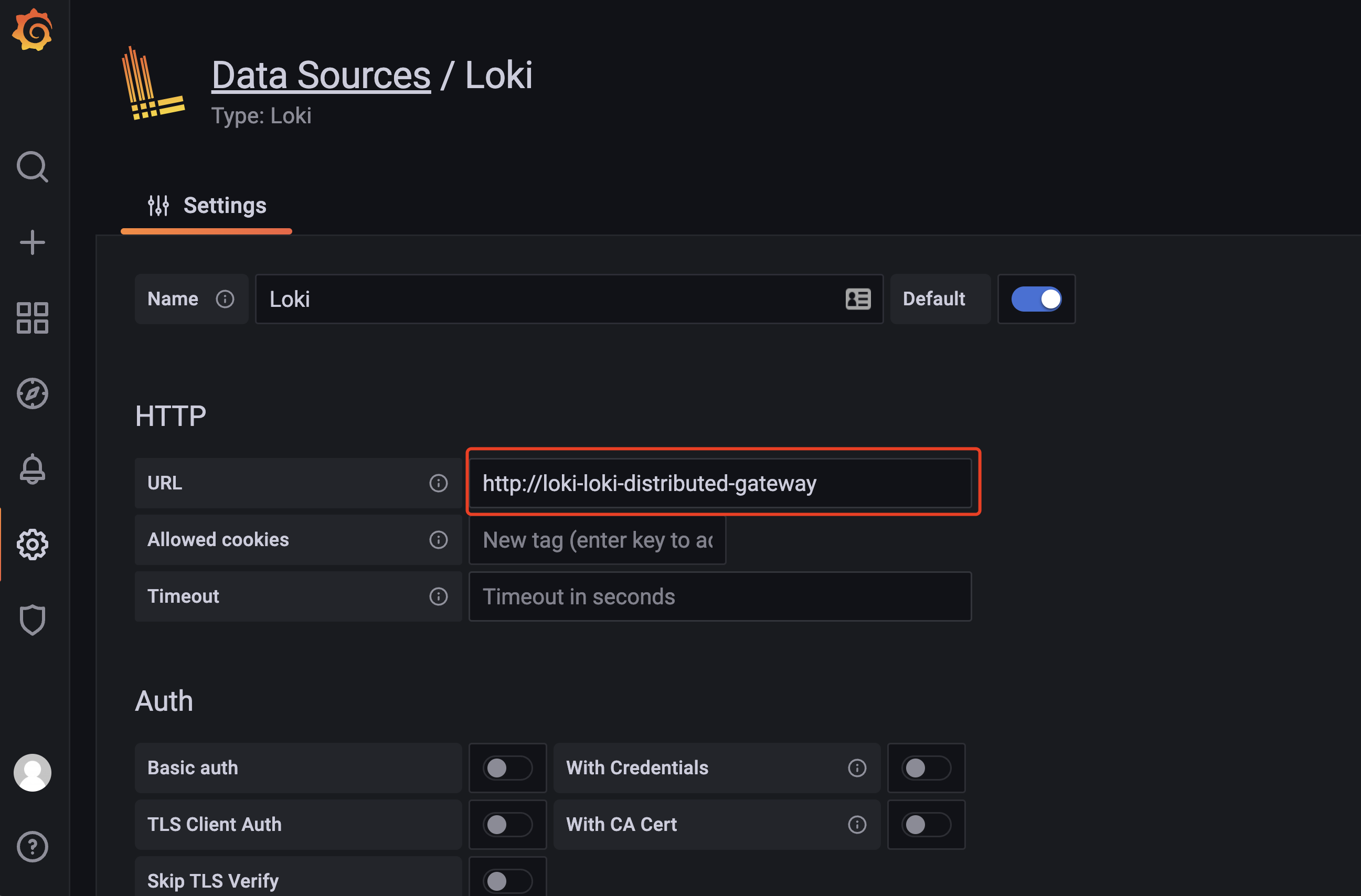

登录后进入 Grafana 添加一个数据源,这里需要注意要填写 gateway 的地址 http://loki-loki-distributed-gateway:

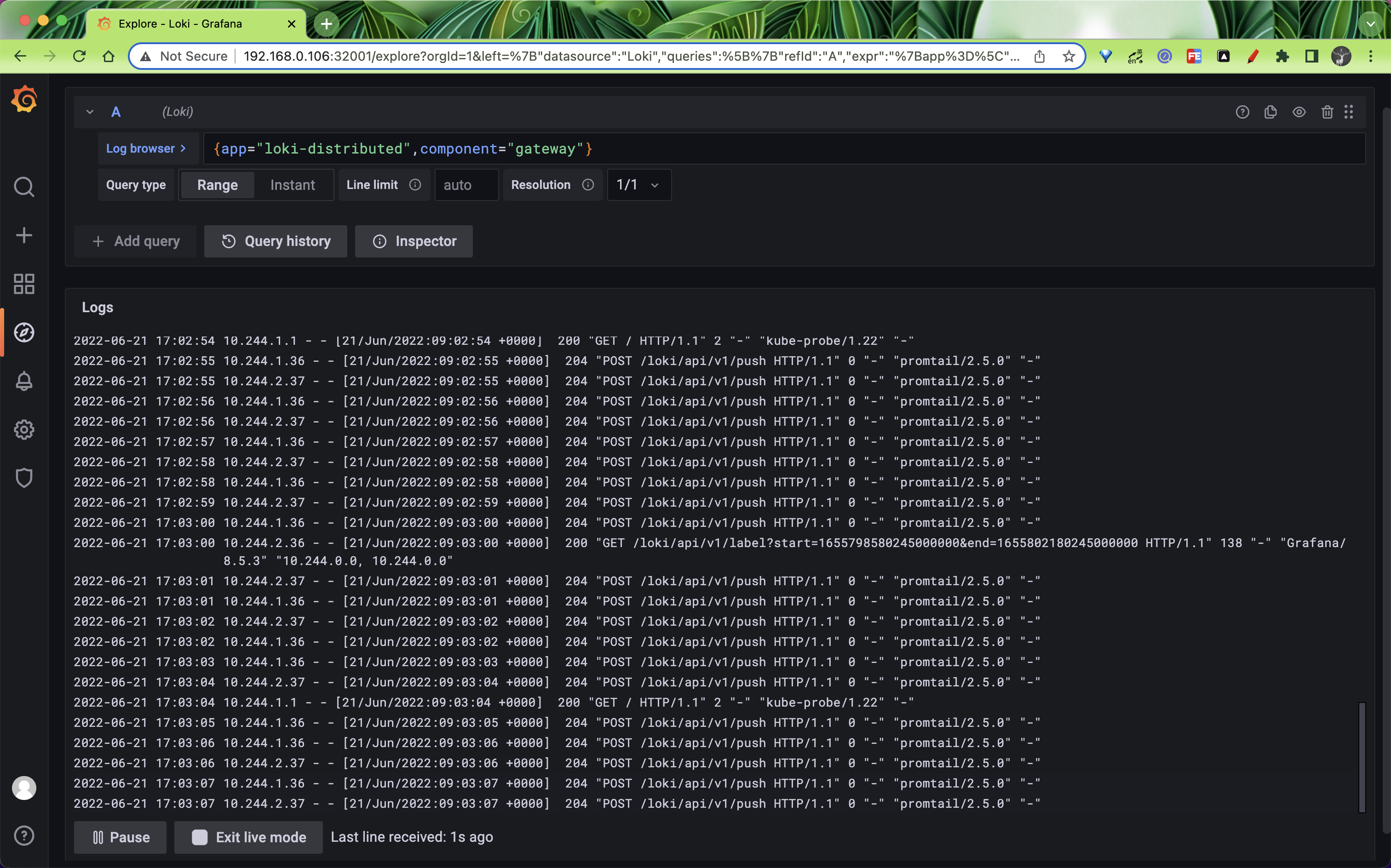

保存数据源后,可以进入 Explore 页面过滤日志,比如我们这里来实时查看 gateway 这个应用的日志,如下图所示:

如果你能看到最新的日志数据那说明我们部署成功了微服务模式的 Loki,这种模式灵活性非常高,可以根据需要对不同的组件做扩缩容,但是运维成本也会增加很多。

缓存¶

上面虽然我们完成了 Loki 的微服务模式部署,当在海量日志数据的情况下我们可能需要启用缓存来提升查询性能。这里我们使用的 grafana/loki-distributed 这个 Chart 包可以为 Loki 使用的各种缓存配置 Memcached 实例,所有缓存的配置都相同,但是目前该包中的 Memcached 缓存方式还有一些问题,我们这里就以 redis 为例来配置缓存。

首先当然需要一个可用的 redis 服务,直接使用下面的资源清单部署 redis 应用。

# loki-redis.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: redis

namespace: logging

spec:

selector:

matchLabels:

app: redis

template:

metadata:

labels:

app: redis

spec:

containers:

- name: redis

image: redis:4

resources:

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 6379

- name: redis-exporter # 它只会提供监控指标数据,主应用的metrics

image: oliver006/redis_exporter:latest

resources:

requests:

cpu: 100m

memory: 100Mi

ports:

- containerPort: 9121

---

kind: Service

apiVersion: v1

metadata:

name: redis

namespace: logging

labels:

app: redis

spec:

selector:

app: redis

ports:

- name: redis

port: 6379

targetPort: 6379

- name: prom

port: 9121

targetPort: 9121

---

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: redis

namespace: logging

spec:

endpoints:

- port: prom

selector:

matchLabels:

app: redis

部署完成后接下来我们为 Loki 集群添加 redis 缓存,定制如下所示的 values 文件:

# cache-values.yaml

loki:

config: |

auth_enabled: false

server:

http_listen_port: 3100

distributor:

ring:

kvstore:

store: memberlist

memberlist:

join_members:

- {{ include "loki.fullname" . }}-memberlist

ingester:

lifecycler:

ring:

kvstore:

store: memberlist

replication_factor: 1

chunk_idle_period: 30m

chunk_block_size: 262144

chunk_retain_period: 1m

max_transfer_retries: 0

wal:

dir: /var/loki/wal

limits_config:

enforce_metric_name: false

reject_old_samples: true

reject_old_samples_max_age: 168h

ingestion_burst_size_mb: 20

ingestion_rate_mb: 10

split_queries_by_interval: 24h

schema_config:

configs:

- from: 2020-09-07

store: boltdb-shipper

object_store: filesystem

schema: v11

index:

prefix: loki_index_

period: 24h

storage_config:

aws:

access_key_id: myaccessKey

bucketnames: loki-data

endpoint: minio.logging.svc.cluster.local:9000

insecure: true

s3forcepathstyle: true

secret_access_key: mysecretKey

boltdb_shipper:

shared_store: filesystem

active_index_directory: /var/loki/index

cache_location: /var/loki/cache

cache_ttl: 168h

filesystem:

directory: /var/loki/chunks

index_queries_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

chunk_store_config:

max_look_back_period: 0

chunk_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

write_dedupe_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

query_range:

cache_results: true

results_cache:

cache:

redis:

endpoint: redis:6379

expiration: 1h

frontend_worker:

frontend_address: {{ include "loki.queryFrontendFullname" . }}:9095

frontend:

log_queries_longer_than: 5s

compress_responses: true

ruler:

storage:

type: local

local:

directory: /etc/loki/rules

ring:

kvstore:

store: memberlist

rule_path: /tmp/loki/scratch

alertmanager_url: http://alertmanager-main.monitoring.svc.cluster.local:9093 # alertmanager的地址

external_url: http://192.168.0.106:31918

distributor:

replicas: 2

ingester: # WAL(replay)

replicas: 2

persistence:

enabled: true

size: 1Gi

storageClass: local-path

querier:

replicas: 2

persistence:

enabled: true

size: 1Gi

storageClass: local-path

queryFrontend:

replicas: 2

gateway: # nginx容器 -> 路由日志写/读的请求

nginxConfig:

httpSnippet: |-

client_max_body_size 100M;

serverSnippet: |-

client_max_body_size 100M;

ruler:

enabled: true

kind: Deployment

replicas: 1

persistence:

enabled: true

size: 1Gi

storageClass: local-path

# -- Directories containing rules files

directories:

tenant_no:

rules1.txt: |

groups:

- name: nginx-rate

rules:

- alert: LokiNginxRate

expr: sum(rate({app="nginx"} |= "error" [1m])) by (job)

/

sum(rate({app="nginx"}[1m])) by (job)

> 0.01

for: 1m

labels:

severity: critical

annotations:

summary: loki nginx rate

description: high request latency

上面的 values 中我们为 Loki 添加了一些缓存配置,核心配置如下所示:

......

query_range:

results_cache:

cache:

redis:

endpoint: redis:6379

expiration: 1h

cache_results: true

storage_config:

index_queries_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

chunk_store_config:

chunk_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

write_dedupe_cache_config:

redis:

endpoint: redis:6379

expiration: 1h

......

直接使用上面的 values 重新覆盖 Loki 即可:

$ helm upgrade --install loki -n logging -f ci/cache-values.yaml .

安装完成后总体的 Pod 如下所示:

$ kubectl get pods -n logging

NAME READY STATUS RESTARTS AGE

grafana-55d8779dc6-mm5f2 1/1 Running 0 70m

loki-loki-distributed-distributor-77f8f99dbb-7snns 1/1 Running 0 38m

loki-loki-distributed-distributor-77f8f99dbb-rsn7l 1/1 Running 0 38m

loki-loki-distributed-gateway-6f4cfd898c-p9xxf 1/1 Running 2 (4h9m ago) 3d1h

loki-loki-distributed-ingester-0 1/1 Running 0 37m

loki-loki-distributed-ingester-1 1/1 Running 0 38m

loki-loki-distributed-querier-0 1/1 Running 0 38m

loki-loki-distributed-querier-1 1/1 Running 0 38m

loki-loki-distributed-query-frontend-78c4ccc99b-4t85w 1/1 Running 0 38m

loki-loki-distributed-query-frontend-78c4ccc99b-cgh8d 1/1 Running 0 38m

loki-loki-distributed-ruler-5654f8cf59-pjb8p 1/1 Running 0 38m

minio-548656f786-mjd4c 1/1 Running 3 (4h10m ago) 6d22h

promtail-ddz27 1/1 Running 1 (4h10m ago) 3d1h

promtail-lzr6v 1/1 Running 1 (4h10m ago) 3d1h

promtail-nldqx 1/1 Running 2 (4h4m ago) 3d1h

redis-7fb8ff6779-m26m6 2/2 Running 0 30m

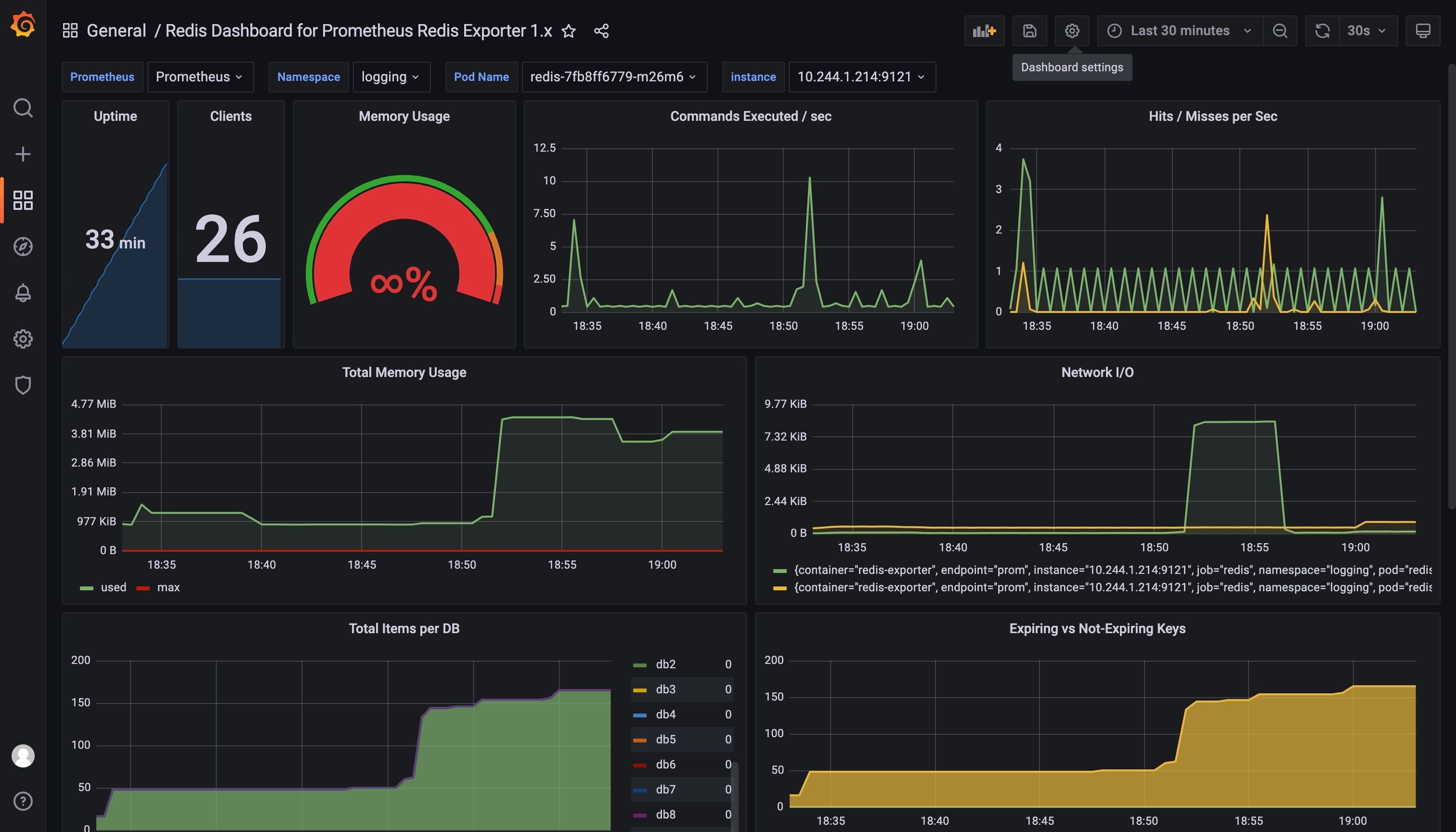

正常现在 Redis 服务就已经再使用了,下图展示了 redis 的运行状态,可以看出压力也并不大。